About

SAHAS: The Science and Art of Human-AI Systems

Welcome! We are a research group based at Dartmouth College's Department of Computer Science in beautiful Hanover, New Hampshire.

Our group aims to understand and improve human-AI partnerships. While our agenda is domain-general, our current focus spans three testbeds: creativity, healthcare, and human behavior and decision-making.

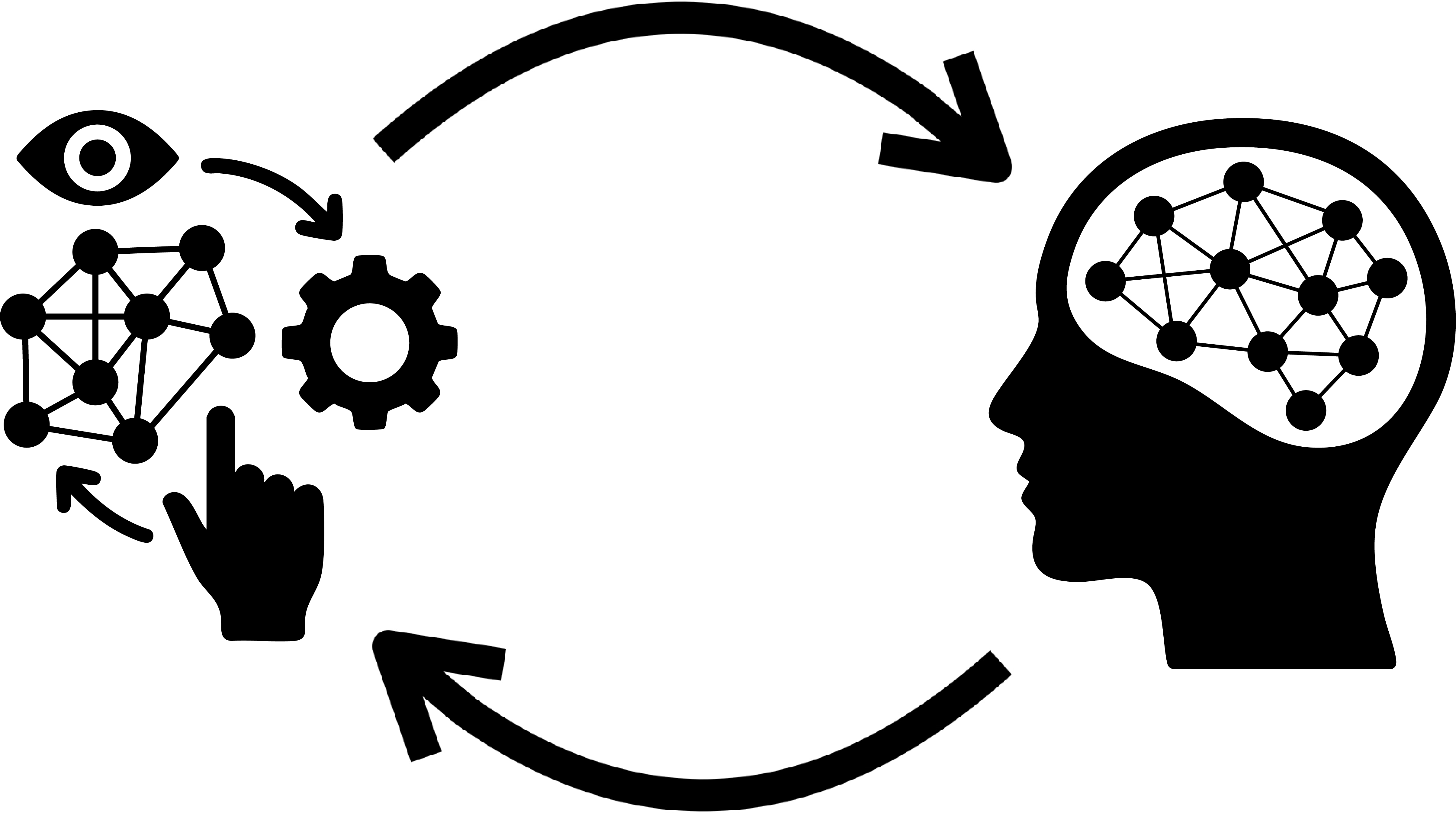

We treat this as both a scientific and a design problem:

Scientifically, we ask: What kinds of capabilities and behaviors make AI systems useful to human minds?

Design-wise, we ask: What designs allow people to effectively steer these systems, build reliable mental models of their behavior, and extend their own cognitive reach?

We call this dual focus the science and art of human-AI systems.

Keywords: Machine Learning, Human-Computer Interaction, Human Behavior, Creativity, Generative Models, Interactive Systems, Interpretability, Steerability, Human-AI Collaboration, Augmented Intelligence, Cognitive Tools, Human-Centered AI, Human-AI Co-Evolution, Human-in-the-Loop Systems

साहस • (sāhas) — stem noun: a complex Hindi word often translated as courage, adventure, intrepidity, daring. More richly, it denotes the enduring moral and psychological resolve to confront uncertainty and act with principled boldness: inner strength, not just outward bravado.

Research Vision

We work across the stack of human-AI systems:

Human-Compatible Capabilities in AI

What kinds of capabilities must AI systems develop to be useful to people?

Channels for Human-AI Communication

How can humans meaningfully shape the behavior of complex AI systems?

Designing for Human Benefit

What mechanisms lead to self-improving human-AI systems over time?